Introduction

Hello, wonderful readers of ‘AI Research News’! This is Emily Chen, your guide in the exciting landscape of artificial intelligence research. Today, I’m going to whisk you away to the world of image manipulation, where the line between reality and fantasy gets intriguingly blurry.

Imagine having a photograph of a puppy, and you want to see what it looks like with its mouth wide open. Instead of painstakingly drawing in an opened mouth, what if you could just drag a point on the image to open that puppy’s mouth as if it were a puppet? Sounds like sci-fi, doesn’t it? Well, that’s what the cutting-edge technology of DragGAN is doing, and let me tell you, it’s turning heads in the AI community!

DragGAN: The Magician’s Wand of Image Editing

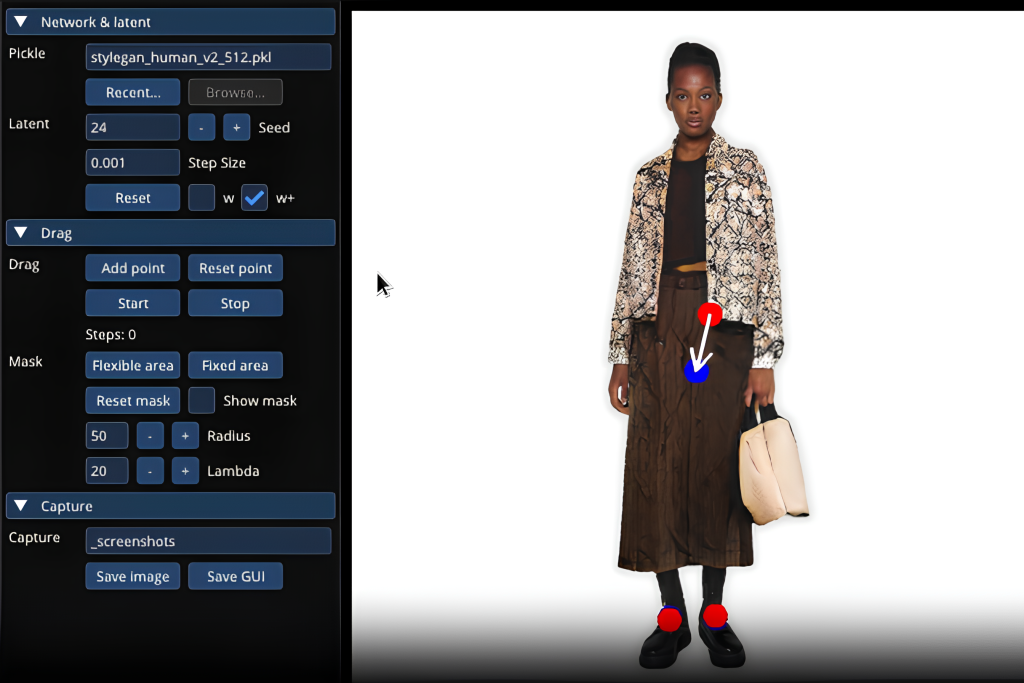

DragGAN is short for ‘Drag Your GAN’, the opening of the paper’s full title: ‘Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold’. A mouthful, right? Hence the snappy ‘DragGAN’. It’s an interactive approach to point-based image editing: you click a point, drag it to where you want it, and the image follows, with precise control and quick feedback.

Imagine having a magic wand that allows you to change an image’s attributes with a simple flick! That’s what the researchers behind DragGAN have conjured up. By harnessing the power of pre-trained GANs (Generative Adversarial Networks, for the uninitiated), they’ve developed a way to edit images so that the results not only follow your input precisely but also still look realistic.

The Showdown: DragGAN vs. The Rest

The most impressive part of DragGAN is how it stacks up against other approaches. For instance, it leaves an earlier point-based editing method, UserControllableLT, in the dust, producing better image quality and moving points closer to where the user asked. And when it comes to keeping track of a dragged point as the image changes, DragGAN’s own tracking beats general-purpose point trackers like RAFT and PIPs. What does this mean for us non-techies? Well, edited photos should end up looking more natural, with the dragged points landing where you put them!

Peek Under the Hood of DragGAN

The magic behind DragGAN lies in its clever use of GANs, specifically StyleGAN, which is known for its ability to generate impressively realistic images. DragGAN adds a new spin to this by enabling users to tweak these images in ways that seem downright magical.

What sets DragGAN apart is its point-based editing process. This approach lets users choose specific points, or ‘handle points,’ on an image and directly manipulate them to achieve the desired effect. For instance, you could drag a point on the jawline of a portrait to adjust the shape of the face.
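For the curious, here’s a toy sketch of one ingredient: keeping track of a handle point while the image changes underneath it. The paper describes doing this with a nearest-neighbor search in the generator’s feature maps; everything else below is my own stand-in for illustration, not DragGAN’s actual code. The function name `track_handle`, the random ‘feature map’, and the use of `np.roll` to fake an edit are all assumptions.

```python
import numpy as np

def track_handle(features, ref_feature, point, radius=3):
    """Return the pixel near `point` whose feature best matches `ref_feature`.

    features:    (H, W, C) array, a stand-in for a generator feature map.
    ref_feature: (C,) feature of the handle point before any editing.
    point:       (row, col) last known handle position.
    """
    h, w, _ = features.shape
    r0, c0 = point
    best, best_dist = point, float("inf")
    # Search a small square window, clipped to the image bounds.
    for r in range(max(r0 - radius, 0), min(r0 + radius + 1, h)):
        for c in range(max(c0 - radius, 0), min(c0 + radius + 1, w)):
            dist = np.abs(features[r, c] - ref_feature).sum()  # L1 distance
            if dist < best_dist:
                best, best_dist = (r, c), dist
    return best

# Hypothetical demo: random features stand in for a real generator.
rng = np.random.default_rng(0)
features = rng.normal(size=(16, 16, 8))
ref = features[5, 5].copy()              # remember what the handle "looks like"

# Fake an edit that slides the content two pixels to the right.
shifted = np.roll(features, shift=2, axis=1)

new_point = track_handle(shifted, ref, (5, 5))
print(new_point)  # (5, 7)
```

The idea is that the handle is recognized by its features rather than by its coordinates, so it can be found again after each small edit step.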

Because the feedback is close to real time, you can nudge a point, see the result, and nudge again until the image looks right. This way of working could reshape digital art, animation, and photo restoration, which also makes ethical use and respect for privacy all the more important.

The Human Touch: Social Implications of DragGAN

Now, you might be wondering: all this sounds fantastic, but what are the real-world implications of DragGAN? As with any technology, it’s essential to consider the social impacts.

Sure, DragGAN could revolutionize areas such as digital art, animation, and even photo restoration, offering tools that are both powerful and easy to use. However, the power to manipulate images so realistically and seamlessly also comes with potential risks.

The technology could be misused to create misleading or harmful images, such as altering a person’s pose, expression, or shape without their consent. Therefore, it’s crucial to use DragGAN responsibly, adhering to privacy regulations and ethical guidelines. After all, with great power comes great responsibility!

A Look Into The Future

What’s next on the horizon for DragGAN? Well, the researchers are planning to extend their point-based editing to 3D generative models. Imagine being able to manipulate 3D models with the same ease and precision as 2D images. Talk about a game-changer!

In the fast-paced, ever-evolving world of AI, DragGAN stands as a testament to the creativity and innovative spirit of researchers. It reminds us that we’re only scratching the surface of what’s possible, one pixel at a time.

Conclusion

Until next time, this is Emily, signing off with a reminder to keep your eyes open to the magic of technology around us!

Project Source Code

The project source code will be made available in June 2023 here: https://github.com/XingangPan/DragGAN

Original Research Paper

You can find the original research paper here: https://arxiv.org/pdf/2305.10973.pdf

Project Page with Sample Videos

Visit the project page for sample videos and more information about DragGAN: https://vcai.mpi-inf.mpg.de/projects/DragGAN/